WHY THIS MATTERS IN BRIEF

If you want to create the next generation of anything, whether it’s AI, aircraft, drugs, energy, healthcare, or materials, you need a supercomputer. Today China has the advantage, but the US wants to get back on top.

For the first time in history the US no longer has a supercomputer among the three most powerful in the world. With China threatening to build the world’s first Exascale supercomputer before 2020, arguably well before the US, the US Department of Energy (DoE) has decided to award a research grant to HP to develop what it calls an “Exascale Supercomputer Reference Design.” The design is based on HP’s memory-driven Memristor platform, nicknamed “The Machine,” and the hope is that between them they can “reinvent the fundamental architecture of computing.”

The DoE has historically operated some of the most powerful supercomputers in the world, but in recent years China has taken over in dramatic fashion. China’s top supercomputer, the Sunway TaihuLight, for example, currently delivers roughly five times the performance (93 petaflops) of Oak Ridge’s Titan (18 petaflops). The US has spoken about retaking the supercomputing crown by commissioning the world’s first Exascale (1,000 petaflops, or 1 exaflop) supercomputer in 2021. The fact is, though, that China, which will allegedly have its first prototype Exascale computer built by the end of 2017 and operational by 2020, is running faster and seems more committed to beating the US at what used to be its own game.
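To put those figures in perspective, here is a minimal Python sketch of the arithmetic. It uses only the rounded petaflop numbers quoted above, and the variable names are purely illustrative.

```python
# Rounded figures quoted in the article, in petaflops (1 petaflop = 10**15 FLOPS).
TAIHULIGHT_PFLOPS = 93
TITAN_PFLOPS = 18
EXASCALE_PFLOPS = 1_000  # 1 exaflop

# How far ahead TaihuLight is, and how far it still sits below Exascale.
taihulight_vs_titan = TAIHULIGHT_PFLOPS / TITAN_PFLOPS
exascale_vs_taihulight = EXASCALE_PFLOPS / TAIHULIGHT_PFLOPS

# One exaflop expressed as calculations per second (one quintillion).
exaflop_in_flops = EXASCALE_PFLOPS * 10**15

print(f"TaihuLight vs Titan: {taihulight_vs_titan:.1f}x")
print(f"Exascale vs TaihuLight: {exascale_vs_taihulight:.1f}x")
print(f"1 exaflop = {exaflop_in_flops:,} calculations per second")
```

In other words, even the current leader would need to get more than ten times faster to reach Exascale.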

To create an effective Exascale supercomputer from scratch, though, you must first solve three problems. The first is the inordinate amount of energy it will consume, which is in the gigawatt rather than the megawatt range. The second is the cooling requirements, which involve re-architecting the interconnects that weave together the hundreds of thousands of processors and memory chips. The third is creating an operating system and software that can scale to, and harness, one quintillion calculations per second.

You can still physically build an Exascale supercomputer without solving all three problems by just strapping together a bunch of CPUs until you hit the magic number. But it won’t perform anywhere near a quintillion calculations per second unless you solve the software issue, and in any case, without a fundamentally new architecture the system would also be incredibly expensive to operate.

That said, this is the approach China is taking: cobble together a huge amount of hardware and then figure out how to use it later.

The DoE, on the other hand, is going down a more sedate path by funding HP, and other supercomputer manufacturers, to create a new reference design that will, it hopes, form the foundation for the next generation of Exascale supers. The funding is coming from a DoE programme called PathForward, which is part of its larger Exascale Computing Project (ECP), set up under President Obama, and the DoE has already awarded tens of millions of dollars to various Exascale research efforts around the US.

So HP has some money and it has supercomputer skills, but what’s its next move? How does it hit that fabled benchmark?

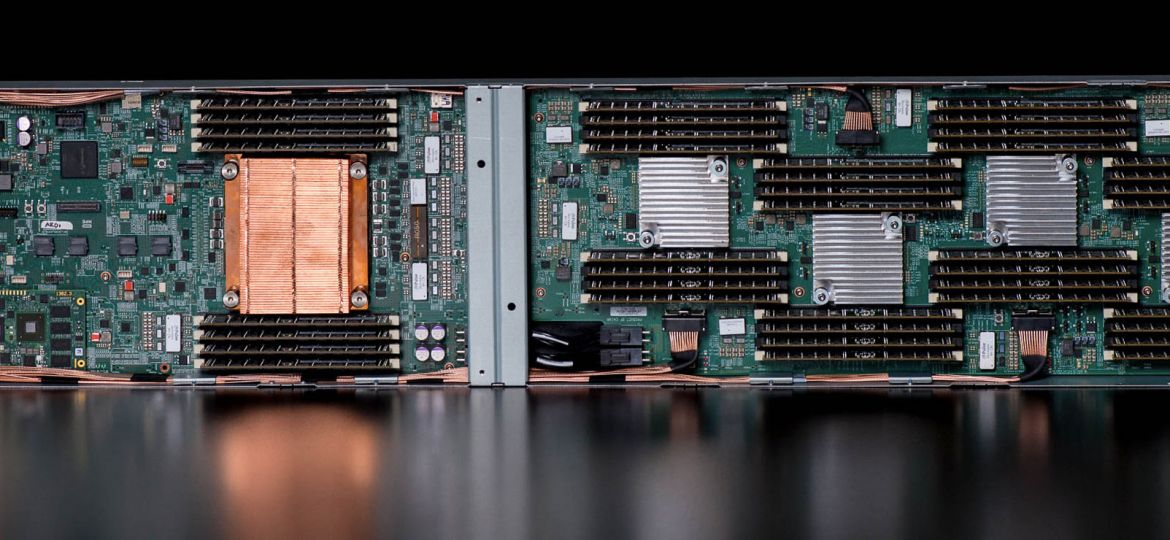

HPE is proposing to build a supercomputer based on an architecture it calls Memory-Driven Computing, which is derived from parts of The Machine. Basically, HP has developed a number of technologies that let a computing system harness massive amounts of addressable memory, apparently up to 4,096 yottabytes, which is on the order of 10^27 bytes. That memory can be pooled together by high speed, low power optical interconnects that are driven by a new silicon photonics chip. Today this memory is volatile, but if HP ever commercialises its Memristor technology, which it’s been playing around with for years now, or embraces Intel’s latest 3D XPoint technology, it’ll be persistent. And there’s your game changer.
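As a rough sanity check on the size of that memory pool, the short Python sketch below converts 4,096 yottabytes into bytes and works out how many address bits it would take to reach every byte. Whether HP means decimal yottabytes (10^24 bytes) or binary ones (2^80 bytes) is an assumption here, so both are shown, and the variable names are illustrative only.

```python
# Two possible readings of "yottabyte"; which one HP intends is an assumption.
YOTTABYTE_DECIMAL = 10**24  # SI yottabyte
YOTTABYTE_BINARY = 2**80    # binary "yottabyte" (yobibyte)

POOL_SIZE = 4096  # yottabytes, as quoted in the article

pool_bytes_decimal = POOL_SIZE * YOTTABYTE_DECIMAL
pool_bytes_binary = POOL_SIZE * YOTTABYTE_BINARY

# Bits needed to give every byte in the pool its own address.
address_bits_decimal = (pool_bytes_decimal - 1).bit_length()
address_bits_binary = (pool_bytes_binary - 1).bit_length()

print(f"Decimal reading: {pool_bytes_decimal:.3e} bytes, {address_bits_decimal} address bits")
print(f"Binary reading:  {pool_bytes_binary:.3e} bytes, {address_bits_binary} address bits")
```

Either way the pool is far beyond what a conventional 64-bit address space can reach directly, which goes some way to explaining why the architecture has to be rethought.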

In addition, and perhaps most importantly, HP says it has developed software tools that can actually use this huge pool of memory to “derive intelligence or scientific insight from huge data sets, such as every post on Facebook, the whole Web, the health data of every human on Earth.”

“We believe Memory-Driven Computing is the solution to move the technology industry forward in a way that can enable advancements across all aspects of society. The architecture we have unveiled can be applied to every computing category – from intelligent edge devices to supercomputers,” said HP’s CTO Mark Potter earlier this year.

In practice we’re probably still some way from realising Potter’s dream, but HP’s tech is certainly a good first step on the path to Exascale, so we’ll see where they get to. And who knows? With blockchain supercomputers potentially on the horizon, Microsoft using FPGAs to turn its public cloud platform into the world’s largest supercomputer, and Nvidia taking a GPU-first approach to building the next generation of high performance, low energy supercomputers, it’ll be an interesting race to watch.

Stay tuned.