WHY THIS MATTERS IN BRIEF

AI, the genie we’ve let out of the bottle, is on the cusp of improving at an exponential rate, and we need to talk about the potential consequences.

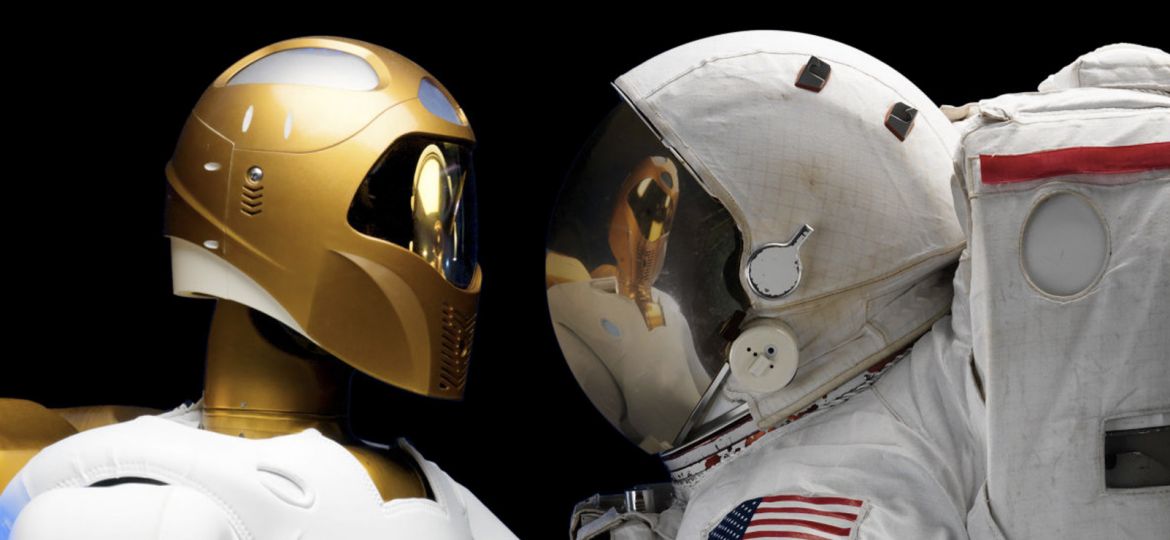

Artificial intelligence is hitting its stride, already giving us machines that can drive themselves, talk to us, fight in our wars, perform our surgeries and beat humanity’s best in a game of Go or Jeopardy.

Recently, five companies at the forefront of AI research met to discuss these advancements, all of which have been rapid (few of these solutions existed even five years ago), and to figure out how to regulate even more powerful systems in the near future.

Researchers from Facebook, Alphabet, Amazon, Microsoft and IBM are looking at the practical consequences of AI, such as how it will impact transportation, jobs and welfare. While the group doesn’t have a name or an official credo, its general goal is to ensure AI research focuses on benefiting people, not harming them.

This isn’t a new battle cry for many AI scientists. In 2015, Elon Musk, Stephen Hawking, the founders of Google DeepMind and dozens of other researchers signed an open letter calling for robust investigations into the impact of AI and ways to ensure it remains a benign tool at humanity’s disposal. But the industry partnership is notable because it represents a renewed, active effort among disparate tech companies – although, interestingly, not the regulators – to address some of the ethical and moral issues posed by AI.

The companies are expected to announce the group officially later this month, although the consortium could grow in the meantime, since Google DeepMind has asked to participate separately from Alphabet, its parent company.

One of the people involved in the industry partnership, Microsoft researcher Eric Horvitz, funded a paper called the One Hundred Year Study, issued by Stanford University a few weeks ago, which discusses the realities of AI and the importance of investigating its impact now. It also calls for increased AI education at all levels of government and outlines plans to publish a report on the state of the industry every five years for the next 100 years.

People in the technology field have long worried that regulators will stymie their work.

“We’re not saying that there should be no regulation,” said Peter Stone, a computer scientist at the University of Texas. “We’re saying that there is a right way and a wrong way.”

As AI becomes increasingly pervasive and powerful, it is crucial that we work now to understand its implications, before we find out that the genie we’ve released from the bottle is out of our control or, worse, has its own agenda, whatever that agenda might be. And as far-fetched as that might sound, when you consider that AIs are building AIs and that robots are learning from each other – albeit at an early evolutionary stage today – it doesn’t take a huge leap to realise how fast things could go south for humanity.